Classification of events into possible, probable and random. The concepts of simple and compound elementary events. Operations on events. The classical definition of the probability of a random event and its properties. Elements of combinatorics in probability theory. Geometric probability. Axioms of probability theory.

One of the basic concepts of probability theory is the concept of an event. An event is any fact that may occur as a result of an experiment, or trial. An experiment, or trial, is the realization of a certain set of conditions.

Event examples:

- hitting the target when firing a gun (experiment: firing a shot; event: hitting the target);

- two heads coming up in three tosses of a coin (experiment: tossing a coin three times; event: two heads);

- a measurement error falling within specified limits when measuring the distance to a target (experiment: measuring the distance; event: the error falls within the limits).

Countless such examples could be given. Events are denoted by capital Latin letters: A, B, C, etc.

Events are either joint or incompatible. Events are called joint if the occurrence of one of them does not exclude the occurrence of the other. Otherwise, the events are called incompatible. For example, two dice are tossed. Event A is a roll of three points on the first die, event B is a roll of three points on the second die. A and B are joint events.

Suppose a store receives a batch of shoes of the same style and size but of different colors. Event A: a box taken at random contains black shoes; event B: the box contains brown shoes. A and B are incompatible events.

An event is called certain if it necessarily occurs under the conditions of the given experiment.

An event is called impossible if it cannot occur under the conditions of the given experiment. For example, drawing a standard part from a batch consisting only of standard parts is a certain event, while drawing a non-standard part is impossible.

An event is called possible, or random, if it may or may not occur as a result of the experiment. Examples of random events: detecting a defect during inspection of a batch of finished products, a discrepancy between the size of a machined part and the specified size, the failure of one of the links of an automated control system.

Events are called equally possible if, under the conditions of the trial, none of them is objectively more likely than the others. For example, suppose a store is supplied with light bulbs in equal quantities by several manufacturers. The events of buying a light bulb made by any one of these manufacturers are equally possible.

An important concept is the complete group of events. Several events in a given experiment form a complete group if at least one of them necessarily occurs as a result of the experiment. For example, an urn contains ten balls, of which six are red and four are white, and five of the balls are numbered. Consider the following events for a single draw:

A - a red ball is drawn,

B - a white ball is drawn,

C - a numbered ball is drawn.

Events A, B, C form a complete group of joint events.

Let us introduce the concept of an opposite, or complementary, event. The event Ā opposite to A is the event that must necessarily occur if event A has not occurred. Opposite events are incompatible and are the only possible ones. They form a complete group of events.

Events and their classification

Basic concepts of probability theory

When constructing any mathematical theory, one first singles out the simplest concepts, which are accepted as initial facts. In probability theory these basic concepts are the random experiment, the random event and the probability of a random event.

A random experiment is the process of recording an observation of an event of interest, carried out under a given stationary (not changing in time) set of real conditions, which inevitably includes the influence of a large number of random factors (factors that cannot be strictly accounted for or controlled).

These factors, in turn, do not allow us to draw completely reliable conclusions about whether the event of interest will occur or not. At the same time, it is assumed that the experiment or observation can, at least in principle, be repeated many times under the same set of conditions.

Here are some examples of random experiments.

1. A random experiment consisting of tossing a perfectly symmetrical coin includes such random factors as the force with which the coin is thrown, the trajectory of its flight, its initial velocity, its spin, etc. These random factors make it impossible to determine the outcome of each individual trial in advance: "heads comes up when the coin is tossed" or "tails comes up when the coin is tossed."

2. The plant "Stalkanat" tests the manufactured cables for the maximum allowable load. The load varies within certain limits from one experiment to another. This is due to such random factors as micro defects in the material from which the cables are made, various interferences in the operation of equipment that occur during the production of cables, storage conditions, the mode of conducting experiments, etc.

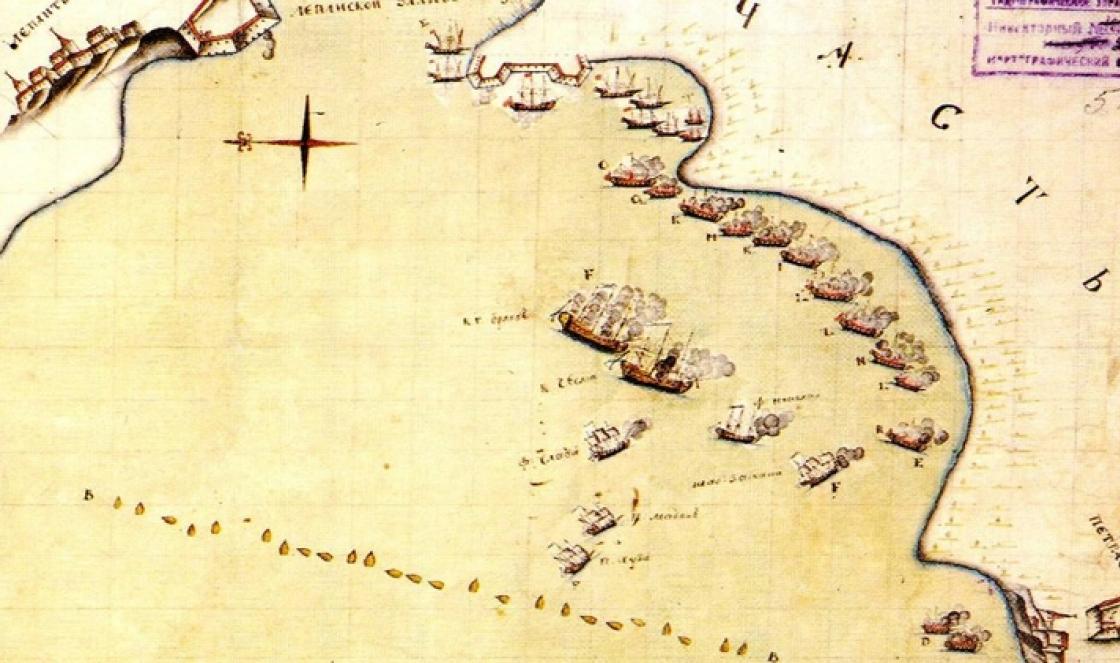

3. A series of shots is fired from the same gun at a specific target. Hitting the target depends on many random factors, which include the condition of the gun and projectile, the installation of the gun, the skill of the gunner, and weather conditions (wind, lighting, etc.).

Definition. The realization of a certain set of conditions is called a trial (test). The result of a trial is called an event.

Random events are denoted by capital Latin letters: A, B, C, ... or by a capital letter with an index: A_1, A_2, ...

For example, taking an exam under a given set of conditions (a written exam, a rating-based grading system, etc.) is a trial for the student, and receiving a particular grade is an event. Likewise, firing a gun under a given set of conditions (weather, the state of the gun, etc.) is a trial, and hitting or missing the target is an event.

We can repeat the same experiment many times under the same conditions. The large number of random factors present in each such experiment makes it impossible to say with certainty whether the event of interest will occur in an individual trial. Probability theory does not attempt to answer that question.

Event classification

Events are certain, impossible or random.

Definition. An event is called certain if, under a given set of conditions, it necessarily occurs.

All certain events are denoted by the letter U (the first letter of the English word universal).

Examples of certain events: drawing a white ball from an urn containing only white balls; winning in a lottery in which every ticket wins.

Definition. An event is called impossible if, under a given set of conditions, it cannot occur.

All impossible events are denoted by the letter .

For example, in Euclidean geometry the sum of the angles of a triangle cannot be greater than 180°, and a grade of "6" cannot be obtained in an exam graded on a five-point scale.

Definition. An event is called random if it may or may not occur under a given set of conditions.

For example, the following are random events: drawing an ace from a deck of cards; a football team winning a match; winning a cash or prize lottery; buying a defective TV, etc.

Definition. Events are called incompatible if the occurrence of one of them excludes the occurrence of any other.

Example 1 If the trial consists of tossing a coin, then the events "heads comes up" and "tails comes up" are incompatible.

Definition. Events are called joint if the occurrence of one of them does not exclude the occurrence of the others.

Example 2 If a shot is fired from each of three guns, then the following events are joint: a hit from the first gun; a hit from the second gun; a hit from the third gun.

Definition. Events are called the only possible ones if at least one of them must necessarily occur when the given set of conditions is realized.

Example 3 When a die is rolled, the following are the only possible events:

A_1 - one point comes up,

A_2 - two points come up,

A_3 - three points come up,

A_4 - four points come up,

A_5 - five points come up,

A_6 - six points come up.

Definition. Events are said to form a complete group if they are the only possible ones and are incompatible.

The events that were considered in examples 1, 3 form a complete group, since they are incompatible and the only possible ones.

Definition. Two events that form a complete group are called opposite.

If A is some event, then the opposite event is denoted by Ā.

Example 4 If the event A is heads, then the event Ā is tails.

Opposite events are also: “the student passed the exam” and “the student did not pass the exam”, “the plant fulfilled the plan” and “the plant did not fulfill the plan”.
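The definitions above can be tried out by treating each event as a set of outcomes. The following is a minimal sketch, assuming a single roll of a die as the trial and following the definition of a complete group as the only possible and incompatible events; the names `OMEGA`, `incompatible`, `complete_group` and `opposite` are illustrative and do not come from the text.

```python
# Sample space for one roll of a die; each event is a set of outcomes.
OMEGA = frozenset(range(1, 7))

def incompatible(a, b):
    """Two events are incompatible if they share no outcome."""
    return not (a & b)

def complete_group(events, omega=OMEGA):
    """Pairwise incompatible events whose union is the whole sample space."""
    events = list(events)
    covers = frozenset().union(*events) == omega
    disjoint = all(incompatible(a, b)
                   for i, a in enumerate(events) for b in events[i + 1:])
    return covers and disjoint

def opposite(a, omega=OMEGA):
    """The opposite event: every outcome in which `a` does not occur."""
    return omega - a

faces = [frozenset({k}) for k in OMEGA]    # the six events of Example 3
even = frozenset({2, 4, 6})                # hypothetical event: an even number

print(complete_group(faces))                   # True
print(complete_group([even, opposite(even)]))  # True
print(incompatible(even, frozenset({2})))      # False: both occur on a roll of 2
```

Under this representation an event and its opposite always form a complete group, which matches the definition of opposite events.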

Definition. Events are called equiprobable, or equally possible, if in the trial they all objectively have the same chance of occurring.

Note that equally likely events can only appear in experiments with outcome symmetry, which is ensured by special methods (for example, making absolutely symmetrical coins, dice, careful shuffling of cards, dominoes, mixing balls in an urn, etc.).

Definition. If the outcomes of a trial are the only possible ones, incompatible and equally possible, they are called elementary outcomes, cases or chances, and the trial itself is called a scheme of cases or an "urn scheme" (since any probabilistic problem for such a trial can be replaced by an equivalent problem with urns and balls of different colors).

Example 5 If an urn contains 3 white and 3 black balls that are identical to the touch, then event A_1 (a white ball is drawn) and event A_2 (a black ball is drawn) are equiprobable.

Definition. Event A is said to favor event B, or event A is said to entail event B, if B is certain to occur whenever A occurs.

If event A entails event B, this is written A ⊂ B. If A entails B and B entails A, the events are called equivalent, written A = B.

Thus, equivalent events A and B either both occur or both do not occur in each trial.

To build probability theory, in addition to the basic concepts already introduced (random experiment, random event), one more basic concept is needed: the probability of a random event.

Ideas about the probability of an event have changed in the course of the development of probability theory. Let us trace the history of this concept.

The probability of a random event is understood as a measure of the objective possibility that the event occurs.

This definition reflects the concept of probability from a qualitative point of view. It was known in the ancient world.

The quantitative definition of the probability of an event was first given in the works of the founders of probability theory, who considered random experiments with symmetric, objectively equiprobable outcomes. Such random experiments, as noted above, are most often artificially organized, with special measures taken to ensure that the outcomes have equal chances (shuffling cards or dominoes, making perfectly symmetrical dice and coins, etc.). For such random experiments, the French mathematician Laplace (1749-1827) formulated the classical definition of probability.

Starting out as a mere collection of facts and empirical observations about dice games, probability theory has become a rigorous science. Fermat and Pascal were the first to give it a mathematical framework.

From reflections on the eternal to the theory of probability

Two people to whom probability theory owes many fundamental formulas, Blaise Pascal and Thomas Bayes, are known to have been deeply religious; Bayes was a Presbyterian minister. Apparently, the desire of these two scientists to refute the belief in a Fortune who bestows good luck on her favorites gave impetus to research in this area. After all, any game of chance, with its wins and losses, is just a symphony of mathematical principles.

Thanks to the passion of the Chevalier de Mere, who was equally a gambler and a man with an interest in science, Pascal was compelled to find a way to calculate probability. De Mere was interested in this question: "How many times must a pair of dice be thrown for the probability of getting 12 points to exceed 50%?" The second question that greatly interested the chevalier was: "How should the stake be divided between the participants in an unfinished game?" Pascal answered both of de Mere's questions, and de Mere became the unwitting initiator of the development of probability theory. It is interesting that de Mere is remembered for this, and not for his literary work.

Before that, no mathematician had attempted to calculate the probabilities of events, since it was believed that such questions could only be answered by guesswork. Blaise Pascal gave the first definition of the probability of an event and showed that it is a specific number that can be justified mathematically. Probability theory has become the basis for statistics and is widely used in modern science.
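De Mere's first question can be checked numerically. The sketch below assumes that the throws are independent and that "getting 12 points" means a double six on at least one throw; the helper name `throws_needed` is hypothetical. It uses the fact that the probability of at least one success in n throws is 1 - (1 - p)^n.

```python
from fractions import Fraction

def throws_needed(p_single, threshold=Fraction(1, 2)):
    """Smallest n such that 1 - (1 - p_single)**n exceeds threshold."""
    n = 0
    p_none = Fraction(1)          # probability of no success so far
    while 1 - p_none <= threshold:
        n += 1
        p_none *= 1 - p_single
    return n

p_double_six = Fraction(1, 36)    # one of 36 equally possible outcomes
n = throws_needed(p_double_six)
print(n)                                    # 25
print(float(1 - (1 - p_double_six) ** n))   # a little above 0.5
```

With 24 throws the probability is still slightly below one half, so under these assumptions 25 is the smallest number of throws that works.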

What is randomness

If we consider a trial that can be repeated an unlimited number of times, then we can define a random event: it is one of the possible outcomes of the experiment.

An experiment is the performance of specific actions under constant conditions.

In order to be able to work with the results of experience, events are usually denoted by the letters A, B, C, D, E ...

Probability of a random event

To be able to proceed to the mathematical part of probability, it is necessary to define all its components.

The probability of an event is a numerical measure of the possibility of the occurrence of some event (A or B) as a result of an experience. The probability is denoted as P(A) or P(B).

In probability theory, an event is:

- certain, if it is guaranteed to occur as a result of the experiment: P(Ω) = 1;

- impossible, if it can never occur: P(Ø) = 0;

- random, if it lies between certain and impossible, that is, its occurrence is possible but not guaranteed (the probability of a random event always satisfies 0 ≤ P(A) ≤ 1).

Relationships between events

The sum of events A + B is the event that occurs when at least one of its components occurs: A, or B, or both A and B.

In relation to each other, events can be:

- Equally possible.

- Compatible.

- Incompatible.

- Opposite (mutually exclusive).

- Dependent.

If two events can occur with equal probability, they are equally possible.

If the occurrence of event A does not reduce the probability of event B to zero, they are compatible.

If events A and B never occur at the same time in the same experiment, then they are called incompatible. Tossing a coin is a good example: coming up tails is automatically not coming up heads.

The probability of the sum of such incompatible events equals the sum of the probabilities of the individual events:

P(A+B)=P(A)+P(B)

Two events are called opposite if exactly one of them must occur: the occurrence of one makes the other impossible, and if one does not occur, the other does. One of them is denoted A and the other Ā (read as "not A"). The occurrence of event A means that Ā did not occur. These two events form a complete group, and their probabilities sum to 1.

Dependent events have mutual influence, decreasing or increasing each other's probability.

Relationships between events. Examples

It is much easier to understand the principles of probability theory and the combination of events using examples.

The experiment that will be carried out is to pull the balls out of the box, and the result of each experiment is an elementary outcome.

An event is one of the possible outcomes of an experience - a red ball, a blue ball, a ball with the number six, etc.

Test number 1. There are 6 balls, three of which are blue with odd numbers, and the other three are red with even numbers.

Test number 2. There are 6 blue balls with numbers from one to six.

Using these tests, we can illustrate each type of event:

- Certain event. In test No. 2, the event "draw a blue ball" is certain: its probability is 1, since all the balls are blue and a miss is impossible. The event "draw the ball with the number 1", by contrast, is random.

- Impossible event. In test No. 1 with blue and red balls, the event "draw a purple ball" is impossible: its probability is 0.

- Equally possible events. In test No. 1, the events "draw the ball with the number 2" and "draw the ball with the number 3" are equally possible, whereas the events "draw a ball with an even number" and "draw the ball with the number 2" have different probabilities.

- Compatible events. Getting a six on the first throw and getting a six on the second throw of a die are compatible events.

- Incompatible events. In test No. 1, the events "draw a red ball" and "draw a ball with an odd number" cannot occur in the same draw, because all the red balls carry even numbers.

- Opposite events. The clearest example is a coin toss, where getting heads is the same as not getting tails, and the sum of their probabilities is always 1 (a complete group).

- Dependent events. In test No. 1, suppose the goal is to draw a red ball twice in a row without returning the first ball. Whether the first ball drawn is red affects the probability that the second one is red.

The first event noticeably affects the probability of the second: it is 2/5 = 40% if the first ball was red and 3/5 = 60% if it was not.
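The 40% and 60% figures can be reproduced with a short calculation. This is a sketch that assumes the first ball is not returned to the box in test No. 1 (3 red and 3 blue balls); the function name `p_red_second` is illustrative.

```python
from fractions import Fraction

def p_red_second(first_is_red, red=3, blue=3):
    """P(second ball is red), given the colour of the first, without replacement."""
    red_left = red - 1 if first_is_red else red
    total_left = red + blue - 1
    return Fraction(red_left, total_left)

print(p_red_second(True))    # 2/5, i.e. 40%
print(p_red_second(False))   # 3/5, i.e. 60%
```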

Event Probability Formula

The transition from guesswork to exact data takes place by putting the subject on a mathematical footing. Judgments about a random event such as "high probability" or "minimal probability" can be translated into specific numbers, which can then be evaluated, compared and used in more complex calculations.

From the point of view of calculation, the probability of an event is the ratio of the number of elementary outcomes favorable to the event to the number of all possible outcomes of the experiment. Probability is denoted by P(A), where P stands for the French word probabilité ("probability").

So, the formula for the probability of an event is:

P(A) = m/n

where m is the number of outcomes favorable to event A and n is the number of all possible outcomes of the experiment. The probability of an event is always between 0 and 1:

0 ≤ P(A) ≤ 1.

Calculation of the probability of an event. Example

Take test No. 1 with balls, described earlier: 3 blue balls with the numbers 1, 3, 5 and 3 red balls with the numbers 2, 4, 6.

Several different problems can be considered for this test:

- A - a red ball is drawn. There are 3 red balls and 6 possible outcomes in total. This is the simplest example: the probability of the event is P(A) = 3/6 = 0.5.

- B - an even number is drawn. There are 3 even numbers (2, 4, 6), and the total number of possible numbers is 6. The probability of this event is P(B) = 3/6 = 0.5.

- C - a number greater than 2 is drawn. There are 4 such outcomes (3, 4, 5, 6) out of 6 possible outcomes. The probability of event C is P(C) = 4/6 ≈ 0.67.

As the calculations show, event C has the highest probability, since it has more favorable outcomes than A or B.
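The three calculations follow directly from the classical definition and can be written as a count of favorable outcomes. A minimal sketch, assuming the six balls of test No. 1 are equally possible outcomes; the names `BALLS` and `probability` are illustrative.

```python
from fractions import Fraction

# Test No. 1: (colour, number) for each of the six balls.
BALLS = [("blue", 1), ("red", 2), ("blue", 3),
         ("red", 4), ("blue", 5), ("red", 6)]

def probability(event, outcomes=BALLS):
    """Classical definition: favourable outcomes m over all outcomes n."""
    m = sum(1 for outcome in outcomes if event(outcome))
    return Fraction(m, len(outcomes))

p_a = probability(lambda ball: ball[0] == "red")   # A: a red ball
p_b = probability(lambda ball: ball[1] % 2 == 0)   # B: an even number
p_c = probability(lambda ball: ball[1] > 2)        # C: a number greater than 2
print(p_a, p_b, p_c)   # 1/2 1/2 2/3
```

Exact fractions are used instead of floats so that 4/6 is reported as 2/3 rather than a rounded decimal.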

Incompatible events

Such events cannot occur simultaneously in the same experiment. In test No. 1, for example, it is impossible to draw a ball that is both blue and red: the ball is either blue or red. In the same way, one roll of a die cannot give both an even and an odd number.

Two events can be combined into a sum or a product. The sum A + B is the event that A or B occurs (at least one of them); the product AB is the event that both occur. An example of a product is two sixes coming up on two dice in one throw.

The sum of several events is the event that at least one of them occurs. The product of several events is the event that all of them occur together.

In probability theory, as a rule, the conjunction "or" corresponds to the sum and the conjunction "and" to the product. Formulas with examples will help to make the logic of addition and multiplication clear.

Probability of the sum of incompatible events

For incompatible events, the probability of the sum of the events equals the sum of their probabilities:

P(A+B)=P(A)+P(B)

For example, let us calculate the probability that in test No. 1 with blue and red balls the number drawn lies strictly between 1 and 4. We calculate it not in one step but as the sum of the probabilities of the elementary components. The experiment has 6 balls, so 6 possible outcomes. The numbers that satisfy the condition are 2 and 3. The probability of drawing the number 2 is 1/6, and the probability of drawing the number 3 is also 1/6. The probability of drawing a number between 1 and 4 is:

1/6 + 1/6 = 1/3

The probability of the sum of incompatible events of a complete group is 1.

So, if in the experiment with a die we add up the probabilities of all six numbers, the result is one.

This is also true for opposite events, for example, in the experiment with a coin, where one of its sides is the event A, and the other is the opposite event Ā:

P(A) + P(Ā) = 1
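The addition rule for incompatible events can be verified on the numbered balls. The sketch below assumes the six numbers are equally possible, each with probability 1/6; `P_NUMBER` and `p_sum` are illustrative names.

```python
from fractions import Fraction

# Six equally possible numbers, as on the balls of test No. 1 or on a die.
P_NUMBER = {k: Fraction(1, 6) for k in range(1, 7)}

def p_sum(numbers):
    """Addition rule: sum the probabilities of incompatible elementary events."""
    return sum((P_NUMBER[k] for k in set(numbers)), Fraction(0))

print(p_sum([2, 3]))        # 1/3: a number strictly between 1 and 4
print(p_sum(range(1, 7)))   # 1: the complete group
print(1 - p_sum([6]))       # 5/6: the event opposite to "a six"
```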

Probability of the product of independent events

Multiplication of probabilities is used when considering the joint occurrence of two or more independent events. (For incompatible events the product is impossible, so its probability is 0.) The probability that independent events A and B both occur equals the product of their probabilities:

P(A*B)=P(A)*P(B)

For example, the probability that in test No. 1 a blue ball is drawn in each of two attempts, with the ball returned to the box after the first attempt, equals

3/6 * 3/6 = 1/4

That is, the probability that only blue balls are drawn in two attempts is 25%. It is easy to run a practical experiment on this problem and see whether this is actually the case.
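The 25% figure can be confirmed by listing every ordered pair of draws. This sketch assumes the first ball is put back before the second draw, which is what makes the two draws independent; the variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

COLOURS = ["blue"] * 3 + ["red"] * 3   # the six balls of test No. 1

# Every ordered pair of draws when the first ball is put back: 6 * 6 = 36 pairs.
pairs = list(product(COLOURS, repeat=2))
both_blue = sum(1 for first, second in pairs if first == second == "blue")

p_enumerated = Fraction(both_blue, len(pairs))   # count favourable pairs
p_product = Fraction(3, 6) * Fraction(3, 6)      # multiplication rule
print(p_enumerated, p_product)   # 1/4 1/4
```

Both routes give the same value, so the multiplication rule agrees with direct counting in this case.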

Joint Events

Events are called joint when the occurrence of one of them can coincide with the occurrence of the other. Joint events may still be independent. For example, when two dice are thrown, a 6 can come up on both. Although the two events occur together, they are independent of each other: the result on one die has no influence on the other.

For joint events, the quantity usually of interest is the probability of their sum.

The probability of the sum of joint events. Example

The probability of the sum of events A and B that are joint is equal to the sum of the probabilities of the events minus the probability of their product (that is, of their joint occurrence):

P(A+B) = P(A) + P(B) - P(AB)

Assume that the probability of hitting the target with one shot is 0.4. Let event A be a hit on the first attempt and event B a hit on the second. These events are joint, since the target can be hit with both the first and the second shot. The events are also independent, so P(AB) = P(A)*P(B). What is the probability of hitting the target at least once in two shots? According to the formula:

0.4 + 0.4 - 0.4*0.4 = 0.64

The answer is: "The probability of hitting the target at least once in two shots is 64%."

This formula for the probability of an event can also be applied to incompatible events, where the probability of the joint occurrence of an event P(AB) = 0. This means that the probability of the sum of incompatible events can be considered a special case of the proposed formula.
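The two-shot example can be cross-checked through the opposite event "both shots miss". A minimal sketch, assuming the shots are independent with hit probability 0.4 each; `p_at_least_one` is a hypothetical helper.

```python
def p_at_least_one(p_a, p_b):
    """P(A + B) for independent events, where P(AB) = P(A) * P(B)."""
    return p_a + p_b - p_a * p_b

p = p_at_least_one(0.4, 0.4)
p_check = 1 - (1 - 0.4) ** 2     # complement: both shots miss

print(round(p, 2), round(p_check, 2))   # 0.64 0.64
```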

Probability geometry for clarity

The probability of the sum of joint events can be pictured as two regions A and B that overlap. The area of their union equals the sum of the two areas minus the area of their intersection, which would otherwise be counted twice. This geometric explanation makes the formula easier to accept. Geometric arguments are not uncommon in probability theory.

The formula for the probability of the sum of more than two joint events is rather cumbersome. To calculate it, use the formulas provided for these cases.

Dependent events

Events are called dependent if the occurrence of one of them (A) affects the probability of the occurrence of the other (B). Both the occurrence of event A and its non-occurrence are taken into account. Although both events are called dependent, in the calculation B is treated as the event that depends on A. The ordinary probability, as for independent events, is denoted P(B). For dependent events a new concept is introduced: the conditional probability P_A(B), which is the probability of the dependent event B given that event A (the hypothesis) on which it depends has occurred.

But event A is also random, so it also has a probability that must and can be taken into account in the calculations. The following example will show how to work with dependent events and a hypothesis.

Example of calculating the probability of dependent events

A good example for calculating dependent events is a standard deck of cards.

Consider dependent events using a deck of 36 cards. We need to determine the probability that the second card drawn from the deck is a diamond, given that the first card drawn is:

- A diamond.

- Another suit.

Obviously, the probability of the second event B depends on the first event A. If the first option holds, the deck has one card fewer (35 cards) and one diamond fewer (8 diamonds), so the probability of event B is:

P_A(B) = 8/35 ≈ 0.23

If the second option holds, the deck again has 35 cards, but all 9 diamonds remain, so the probability of event B is:

P_Ā(B) = 9/35 ≈ 0.26.

It can be seen that if the first card is a diamond (event A has occurred), the probability of event B decreases, and vice versa.

Multiplication of dependent events

In the previous section we took the first event (A) as given, but in essence it is random. The probability of this event, namely drawing a diamond from the deck, is:

P(A) = 9/36 = 1/4

Since theory does not exist for its own sake but serves practical purposes, it is fair to note that what is most often needed is the probability of the product of dependent events.

According to the theorem on the product of probabilities of dependent events, the probability that dependent events A and B both occur equals the probability of event A multiplied by the conditional probability of event B given A:

P(AB) = P(A) * P_A(B)

Then in the example with the deck, the probability of drawing two diamonds in a row is:

9/36 * 8/35 ≈ 0.0571, or 5.7%

And the probability of first drawing a card that is not a diamond and then a diamond is:

27/36 * 9/35 ≈ 0.19, or 19%

It can be seen that the probability of occurrence of event B is greater, provided that a card of a suit other than a diamond is drawn first. This result is quite logical and understandable.
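The deck calculations can be repeated with exact fractions. This is a sketch assuming a 36-card deck with 9 diamonds and two cards drawn without replacement; the constant and variable names are illustrative.

```python
from fractions import Fraction

DECK, DIAMONDS = 36, 9

p_a = Fraction(DIAMONDS, DECK)                   # first card is a diamond
p_b_given_a = Fraction(DIAMONDS - 1, DECK - 1)   # 8/35
p_b_given_not_a = Fraction(DIAMONDS, DECK - 1)   # 9/35

# Product rule for dependent events: P(AB) = P(A) * P_A(B).
p_two_diamonds = p_a * p_b_given_a
p_other_then_diamond = (1 - p_a) * p_b_given_not_a

print(p_two_diamonds, round(float(p_two_diamonds), 4))              # 2/35 0.0571
print(p_other_then_diamond, round(float(p_other_then_diamond), 4))  # 27/140 0.1929
```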

Total probability of an event

When a problem with conditional probabilities involves several hypotheses, the rules above are not enough on their own. Suppose the hypotheses A_1, A_2, ..., A_n, ... form a complete group of events, that is:

- P(A_i) > 0, i = 1, 2, …

- A_i ∩ A_j = Ø, i ≠ j.

- Σ_k A_k = Ω.

Then the formula for the total probability of event B with a complete group of hypotheses A_1, A_2, ..., A_n is:

P(B) = P(A_1) * P_{A_1}(B) + P(A_2) * P_{A_2}(B) + ... + P(A_n) * P_{A_n}(B)
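The total probability formula sums, over all hypotheses A_k of a complete group, the product of P(A_k) and the conditional probability of B given A_k. As an illustration, the sketch below applies it to the 36-card deck from the earlier example, with B being "the second card drawn is a diamond"; the function name `total_probability` is hypothetical.

```python
from fractions import Fraction

def total_probability(hypotheses):
    """hypotheses: list of (P(A_k), P(B given A_k)) for a complete group."""
    assert sum(p for p, _ in hypotheses) == 1, "hypotheses must form a complete group"
    return sum((p * cond for p, cond in hypotheses), Fraction(0))

# Deck of 36 cards; B = "the second card drawn is a diamond".
p_b = total_probability([
    (Fraction(9, 36), Fraction(8, 35)),    # A_1: first card is a diamond
    (Fraction(27, 36), Fraction(9, 35)),   # A_2: first card is another suit
])
print(p_b)   # 1/4
```

The result equals the probability that the first card is a diamond, as expected: without knowledge of the first card, the second card is as likely to be a diamond as any other card in the deck.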

A look into the future

The probability of a random event is essential in many areas of science: econometrics, statistics, physics, etc. Some processes cannot be described deterministically because they are probabilistic by nature, so special methods are needed to work with them. Probability theory can be used in any field of technology as a way to assess the possibility of an error or malfunction.

It can be said that by knowing a probability we take a theoretical step into the future, looking at it through the prism of formulas.

Plan.

1. Random variable (RV) and the probability of an event.

2. Distribution law of an RV.

3. Binomial distribution (Bernoulli distribution).

4. Poisson distribution.

5. Normal (Gaussian) distribution.

6. Uniform distribution.

7. Student's distribution.

2.1 Random variable and event probability

Mathematical statistics is closely related to another mathematical science, probability theory, and is based on its mathematical apparatus.

Probability theory is a science that studies patterns generated by random events.

Pedagogical phenomena are among the mass ones: they cover large populations of people, are repeated from year to year, and occur continuously. Indicators (parameters, results) of the pedagogical process are of a probabilistic nature: the same pedagogical influence can lead to different consequences (random events, random variables). Nevertheless, with repeated reproduction of conditions, certain consequences appear more often than others - this is the manifestation of the so-called statistical regularities (which are studied by probability theory and mathematical statistics).

A random variable (RV) is a numerical characteristic that is measured in the course of an experiment and depends on its random outcome. The value a random variable takes in an experiment is itself random. Each random variable defines a probability distribution.

The main property of pedagogical processes and phenomena is their probabilistic nature (under given conditions they may occur, but they may also not occur). For such phenomena, the concept of probability plays an essential role.

Probability (P) shows the degree of possibility of a given event, phenomenon or result. The probability of an impossible event is zero, p = 0; the probability of a certain event is one, p = 1 (100%). The probability of any event lies between 0 and 1.

If we are interested in event A, we can usually observe and record the facts of its occurrence. The need for the concept of probability and its calculation arises only when we do not observe the event every time, or when we realize that it may or may not occur. In both cases it is useful to use the frequency of occurrence of the event, f(A): the ratio of the number of cases in which it occurred (favorable outcomes) to the total number of observations. The frequency of occurrence of a random event depends not only on the degree of randomness of the event itself, but also on the number of observations.
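The dependence of the frequency f(A) on the number of observations can be seen in a small simulation. The sketch below assumes a fair six-sided die simulated with Python's standard `random` module and takes A to be "an even number comes up"; the names `frequency` and `is_even` are illustrative, and the seed is fixed only to make runs repeatable.

```python
import random

def frequency(event, trials, seed=0):
    """Relative frequency f(A): occurrences of A divided by the number of trials."""
    rng = random.Random(seed)          # fixed seed so the run is repeatable
    hits = sum(1 for _ in range(trials) if event(rng.randint(1, 6)))
    return hits / trials

def is_even(roll):
    return roll % 2 == 0

for n in (10, 1000, 100000):
    print(n, frequency(is_even, n))
```

With few trials the frequency can be far from 0.5; as the number of trials grows it tends to settle near the probability of the event.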

There are two types of SV samples: dependent And independent. If the results of measuring a certain property in objects of the first sample do not affect the results of measuring this property in objects of the second sample, then such samples are considered independent. When the results of one sample affect the results of another sample, the samples are considered dependent. The classic way to get dependent measurements is to double measure the same property (or different properties) for members of the same group.

Event A does not depend on event B if the probability of A does not depend on whether or not B occurred. Events A and B are independent if P(AB) = P(A)P(B). In practice, the independence of events is established from the conditions of the experiment, the researcher's intuition, and practical experience.
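The criterion P(AB) = P(A)P(B) can be checked directly when all outcomes are equally likely. The sketch below assumes a hypothetical experiment with two fair dice; the events A, B and C are illustrative choices, and exact fractions are used to avoid rounding errors.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two dice (an assumed experiment).
outcomes = list(product(range(1, 7), repeat=2))


def prob(event):
    """Classical probability: favorable outcomes over all outcomes."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def independent(e1, e2):
    """Check the product rule P(AB) = P(A) * P(B)."""
    return prob(lambda o: e1(o) and e2(o)) == prob(e1) * prob(e2)


def a(o):
    return o[0] == 3          # A: three points on the first die


def b(o):
    return o[1] == 3          # B: three points on the second die


def c(o):
    return o[0] + o[1] == 4   # C: the sum of points equals 4


print(independent(a, b))  # True: the dice do not influence each other
print(independent(a, c))  # False: knowing A changes the chance of C
```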

An RV may be discrete (its possible values can be enumerated), for example the number of points rolled on a die: 4, 6, 2; or continuous (its distribution function F(x) is continuous), for example the lifetime of a light bulb.

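The mathematical expectation is a numerical characteristic of an RV, approximately equal to the average of its observed values. For a discrete RV X that takes the values $x_1, \dots, x_n$ with probabilities $p_1, \dots, p_n$:

$$M(X) = x_1 p_1 + x_2 p_2 + \dots + x_n p_n$$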
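As a worked illustration of the formula for the mathematical expectation, the sketch below computes it for a fair die. The dictionary representation of the distribution and the function name are assumptions made for this example.

```python
from fractions import Fraction


def expectation(distribution):
    """M(X) = x_1*p_1 + ... + x_n*p_n for a {value: probability} mapping."""
    return sum(x * p for x, p in distribution.items())


# A fair die: each of the six values has probability 1/6.
die = {x: Fraction(1, 6) for x in range(1, 7)}
print(expectation(die))  # 7/2
```

The result 7/2 = 3.5 is not itself a possible value of the die, which shows that the expectation describes the average over many trials rather than any single outcome.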

2.2 Distribution law of a random variable

Are phenomena that are random in nature subject to any laws? Yes, but these laws differ from the physical laws we are used to. The values of an RV cannot be predicted even under known experimental conditions; we can only indicate the probabilities with which the RV takes one value or another. But knowing the probability distribution of an RV, we can draw conclusions about the events in which these random variables participate. True, these conclusions will also be probabilistic in nature.

Let an RV be discrete, i.e. let it take only fixed values $X_i$. In this case, the series of probabilities $P(X_i)$ for all admissible values of this quantity (i = 1, ..., n) is called its distribution law.

The distribution law of an RV is a relation that establishes the correspondence between the possible values of the RV and the probabilities with which these values are taken. The distribution law fully characterizes the RV.
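For a discrete RV the distribution law is simply a table of values and probabilities. The sketch below builds such a table for an assumed example, the sum of points on two fair dice, and then uses it to answer a question about an event; the function name is hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def distribution_law_of_sum(n_dice=2):
    """Return {value: probability} for the sum of points on n fair dice."""
    counts = Counter(sum(roll) for roll in product(range(1, 7), repeat=n_dice))
    total = 6 ** n_dice
    return {value: Fraction(c, total) for value, c in sorted(counts.items())}


law = distribution_law_of_sum()
for value, p in law.items():
    print(value, p)

# The probabilities of all admissible values must add up to one.
print(sum(law.values()))

# Knowing the law, we can find the probability of an event such as "sum >= 10".
print(sum(p for value, p in law.items() if value >= 10))
```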

When constructing a mathematical model to test a statistical hypothesis, it is necessary to introduce a mathematical assumption about the distribution law of the RV (the parametric way of building a model).

The non-parametric approach to describing the mathematical model (the RV is not assumed to follow a parametric distribution law) is less accurate but has a wider scope.

As with the probability of a random event, there are only two ways to find the distribution law of an RV. Either we build a scheme of the random event and find an analytical expression (formula) for calculating the probability (perhaps someone has already done this, or will do it before you!), or we have to run an experiment and, based on the observed frequencies, make some assumptions (put forward hypotheses) about the distribution law.

Of course, for each of the "classical" distributions this work was done long ago. The binomial and multinomial distributions, the geometric and hypergeometric distributions, the Pascal and Poisson distributions, and many others are widely known and very often used in applied statistics.

For almost all classical distributions, special statistical tables were constructed and published, and they were refined as the accuracy of the calculations increased. Without the many volumes of these tables, and without learning the rules for using them, the practical use of statistics was impossible for the last two centuries.

Today the situation has changed: there is no need to store data calculated from formulas (no matter how complicated the formulas are!), and the time needed to apply a distribution law in practice has been reduced to minutes or even seconds. A sufficient number of applied software packages for these purposes already exist.

Among all probability distributions, there are those that are used most often in practice. These distributions have been studied in detail and their properties are well known. Many of these distributions form the basis of entire fields of knowledge, such as queuing theory, reliability theory, quality control, game theory, and so on.

2.3 Binomial distribution (Bernoulli distribution)

It arises when the question is posed: how many times does an event occur in a series of a given number of independent observations (experiments) performed under the same conditions?

For convenience and clarity, we will assume that we know the value p, the probability that a visitor entering the store will be a buyer, and (1 - p) = q, the probability that a visitor entering the store will not be a buyer.

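If X is the number of buyers among a total of n visitors, then the probability that there are k buyers among the n visitors is

$$P(X = k) = C_n^k \, p^k q^{n-k}, \quad k = 0, 1, \dots, n \qquad (1)$$

where $C_n^k = \frac{n!}{k!\,(n-k)!}$ is the number of combinations of n elements taken k at a time.

Formula (1) is called the Bernoulli formula. With a large number of trials, the binomial distribution approaches the normal distribution.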
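The Bernoulli formula, P(X = k) = C(n, k) p^k q^(n-k), is easy to evaluate numerically. The following sketch uses the store example; the values n = 10 visitors and p = 0.3 are hypothetical numbers chosen only for illustration.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k buyers among n visitors (Bernoulli formula)."""
    q = 1 - p
    return comb(n, k) * p**k * q ** (n - k)


n, p = 10, 0.3  # hypothetical: 10 visitors, each buys with probability 0.3
for k in range(n + 1):
    print(k, round(binomial_pmf(k, n, p), 4))

# The probabilities over k = 0..n add up to one (up to rounding error).
print(sum(binomial_pmf(k, n, p) for k in range(n + 1)))
```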

2.4 Poisson distribution

It plays an important role in a number of problems in physics, communication theory, reliability theory, queuing theory, etc.: wherever a random number of events (radioactive decays, telephone calls, equipment failures, accidents, etc.) can occur during a certain time.

Consider the most typical situation in which the Poisson distribution occurs. Let some events (shop purchases) occur at random times. Let us determine the number of occurrences of such events in the time interval from 0 to T.

The random number of events that occur in the time from 0 to T is distributed according to the Poisson law with the parameter λ = aT, where a > 0 is a parameter of the problem that reflects the average frequency of events. The probability of k purchases over a large time interval (for example, a day) is

$$P(Z = k) = \frac{\lambda^k}{k!} \, e^{-\lambda}, \quad k = 0, 1, 2, \dots \qquad (2)$$
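The Poisson probabilities can be computed from the standard formula P(Z = k) = λ^k e^(-λ) / k! with λ = aT. In the sketch below the rate of 2 purchases per hour and the interval of 1.5 hours are hypothetical values, and the function name is an assumption.

```python
from math import exp, factorial


def poisson_pmf(k, rate, duration):
    """Probability of exactly k events in the interval [0, duration].

    rate is the average frequency of events (the parameter a),
    so the Poisson parameter is lam = rate * duration.
    """
    lam = rate * duration
    return lam**k * exp(-lam) / factorial(k)


rate, duration = 2.0, 1.5  # hypothetical: 2 purchases per hour, 1.5 hours
for k in range(8):
    print(k, round(poisson_pmf(k, rate, duration), 4))
```

Summing k * P(Z = k) over k recovers λ = aT, which is why the parameter a is read as the average frequency of events.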

2.5 Normal (Gaussian) distribution

The normal (Gaussian) distribution occupies a central place in the theory and practice of probabilistic and statistical research. As a continuous approximation to the binomial distribution, it was first considered by A. de Moivre in 1733. Some time later the normal distribution was rediscovered and studied by C. F. Gauss (1809) and P. Laplace, who arrived at the normal function in connection with their work on the theory of observational errors.

A continuous random variable X is said to be distributed according to the normal law if its distribution density is equal to

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

where the parameter μ coincides with the mathematical expectation of X: μ = M(X), and the parameter σ coincides with the standard deviation of X: σ = σ(X). The graph of the normal density, as can be seen from the figure, has the form of a dome-shaped curve called the Gaussian curve; its maximum point has coordinates $\left(\mu;\ \frac{1}{\sigma\sqrt{2\pi}}\right)$.

At μ = 0, σ = 1 this curve has received the status of a standard and is called the unit (standard) normal curve. Collected data are usually transformed so that their distribution curve is as close as possible to this standard curve.
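Both the normal density and the transformation of data toward the unit normal curve can be sketched in a few lines. The density below is the standard formula f(x) = exp(-(x-μ)²/(2σ²)) / (σ√(2π)); the transformation shown is the usual standardization z = (x - μ)/σ, which is an assumption about what "transformed" means here, and the data values are hypothetical.

```python
from math import exp, pi, sqrt
from statistics import mean, pstdev


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal law with expectation mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return exp(-0.5 * z * z) / (sigma * sqrt(2 * pi))


def standardize(values):
    """Shift and scale the data so that their mean is 0 and standard deviation is 1."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    return [(v - mu) / sigma for v in values]


# The maximum of the density is reached at x = mu.
print(normal_pdf(0.0), 1 / sqrt(2 * pi))

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical measurements
z_scores = standardize(data)
print(z_scores)
```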

The normalized curve was devised to solve problems in probability theory, but in practice it turned out to approximate very well the frequency distribution of many variables when the number of observations is large. It can be supposed that, were there no practical limits on the number of objects and the duration of the experiment, a statistical study would reduce to the normal curve.

2.6 Uniform distribution

The uniform probability distribution is the simplest, and it can be either discrete or continuous. A discrete uniform distribution is one in which each of the values of the RV has the same probability, that is:

$$P(X = x_i) = \frac{1}{N}, \quad i = 1, \dots, N$$

where N is the number of possible values of the RV.

The probability distribution of a continuous RV X that takes all its values from the segment [a; b] is called uniform if its probability density is constant on this segment and equal to zero outside it:

$$f(x) = \begin{cases} \dfrac{1}{b-a}, & a \le x \le b \\ 0, & \text{otherwise} \end{cases} \qquad (5)$$
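For the continuous uniform law on [a; b] the density is 1/(b - a) inside the segment and zero outside, so the probability of landing in a sub-interval is proportional to its length. A minimal sketch, with hypothetical function names and a hypothetical segment [0; 4]:

```python
def uniform_pdf(x, a, b):
    """Density of the uniform law on [a, b]: constant inside, zero outside."""
    return 1.0 / (b - a) if a <= x <= b else 0.0


def uniform_interval_probability(c, d, a, b):
    """P(c <= X <= d) for X uniform on [a, b]: overlap length over (b - a)."""
    lo, hi = max(a, c), min(b, d)
    return max(0.0, hi - lo) / (b - a)


a, b = 0.0, 4.0  # hypothetical segment
print(uniform_pdf(1.0, a, b))                        # 0.25
print(uniform_pdf(5.0, a, b))                        # 0.0
print(uniform_interval_probability(1.0, 3.0, a, b))  # 0.5
```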

2.7 Student's distribution

This distribution is related to the normal distribution. If the RVs $x_0, x_1, x_2, \dots, x_n$ are independent, and each of them has the standard normal distribution N(0,1), then the RV

$$t = \frac{x_0}{\sqrt{\frac{1}{n}\sum_{i=1}^{n} x_i^2}}$$

has a distribution called Student's distribution (with n degrees of freedom).
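The link between Student's distribution and the normal distribution can be checked by simulation. The sketch below assumes the usual construction t = x_0 / sqrt((x_1² + ... + x_n²)/n) from independent N(0,1) variables x_0, ..., x_n; this construction, the choice n = 10, and the function name are assumptions made for the example. For n > 2 the variance of such a variable is known to be n/(n - 2), which the simulation should approximately reproduce.

```python
import random
from math import sqrt
from statistics import pvariance


def student_sample(n, rng):
    """Draw one value of t = x0 / sqrt(mean of n squared N(0,1) variables)."""
    x0 = rng.gauss(0.0, 1.0)
    mean_square = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n)) / n
    return x0 / sqrt(mean_square)


rng = random.Random(0)  # fixed seed so that the run is reproducible
n = 10                  # assumed number of degrees of freedom
draws = [student_sample(n, rng) for _ in range(20_000)]

# Empirical variance next to the theoretical value n / (n - 2).
print(pvariance(draws), n / (n - 2))
```

The variance is larger than 1, the variance of the standard normal law, which reflects the heavier tails of Student's distribution.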


Probability theory is a branch of mathematics that studies the regularities of random phenomena. Random phenomena are phenomena with an uncertain outcome that occur when a certain set of conditions is repeatedly reproduced. The formation and development of probability theory is associated with the names of such great scientists as Cardano, Pascal, Fermat, Bernoulli, Gauss, Chebyshev, Kolmogorov, and many others.

The regularities of random phenomena were first discovered in the 16th-17th centuries in games of chance such as dice. Regularities in births and deaths have also been known for a very long time. For example, it is known that the probability of a newborn being a boy is approximately 0.515. In the 19th and 20th centuries a large number of such regularities were discovered in physics, chemistry, biology, etc.

At present, the methods of probability theory are widely used in various branches of natural science and technology: in reliability theory, queuing theory, theoretical physics, geodesy, astronomy, gunnery theory, the theory of observation errors, automatic control theory, the general theory of communication, and many other theoretical and applied sciences. Probability theory also serves as the foundation of mathematical and applied statistics, which in turn are used in the planning and organization of production, in the analysis of technological processes, in preventive and acceptance control of product quality, and for many other purposes. In recent years the methods of probability theory have penetrated more and more widely into various areas of science and technology, contributing to their progress.

Trial. Event. Event classification

A trial is a repeated reproduction of the same set of conditions under which an observation is made. A qualitative result of a trial is an event.

Example 1: An urn contains colored balls. One ball is drawn from the urn at random. Trial - drawing a ball from the urn; event - the appearance of a ball of a certain color.

Definition 2: The set of mutually exclusive outcomes of one trial is called the set of elementary events, or elementary outcomes.

Example 2: A die is tossed once. Trial - tossing the die; event - a certain number of points comes up. The set of elementary outcomes is {1, 2, 3, 4, 5, 6}.

Events are denoted by capital letters of the Latin alphabet: $A_1, A_2, \dots$, A, B, C, ...

Observed events (phenomena) can be divided into the following three types: certain, impossible, and random.

Definition 3: An event is called certain if it will definitely occur as a result of the trial.

Definition 4: An event is called impossible if it will never occur as a result of the trial.

Definition 5: An event is called random if, as a result of the trial, it may either occur or not occur.

Example 3: Trial - a ball is tossed up. Event A = (the ball will fall) is certain; event B = (the ball will hang in the air) is impossible; event C = (the ball will fall on the thrower's head) is random.

Random events (phenomena) can be divided into the following types: joint, incompatible, opposite, and equally possible.

Definition 6: Two events are called joint if, in one trial, the occurrence of one of them does not exclude the occurrence of the other.

Definition 7: Two events are called incompatible if, in one trial, the occurrence of one of them excludes the occurrence of the other.

Example 4: A coin is tossed twice. Event A = (heads on the first toss); event B = (heads on the second toss); event C = (tails on the first toss). Events A and B are joint; events A and C are incompatible.

Definition 8: Several events form a complete group in a given trial if they are pairwise incompatible and one of them is sure to occur as a result of the trial.

Example 5: A boy drops a coin into a slot machine. Event A = (the boy wins); event B = (the boy does not win). A and B form a complete group of events.

Definition 9: Two incompatible events that form a complete group are called opposite. The event opposite to event A is denoted $\bar{A}$.

Example 6: One shot is fired at a target. Event A is a hit; event $\bar{A}$ is a miss.
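The classification of events can be made concrete by representing each event as a set of elementary outcomes. The sketch below assumes a single roll of a die as the trial; the function names and the particular events are hypothetical and serve only to illustrate the definitions of joint, incompatible, and opposite events and of a complete group.

```python
# Set of elementary outcomes of one roll of a die (an assumed trial).
omega = frozenset(range(1, 7))


def are_joint(a, b):
    """Joint events share at least one elementary outcome."""
    return bool(a & b)


def are_incompatible(a, b):
    """Incompatible events have no elementary outcome in common."""
    return not (a & b)


def is_complete_group(events, omega):
    """Pairwise incompatible events whose union covers every outcome."""
    pairwise = all(
        are_incompatible(a, b)
        for i, a in enumerate(events)
        for b in events[i + 1:]
    )
    return pairwise and frozenset().union(*events) == omega


def opposite(a, omega):
    """The opposite event consists of all outcomes not in a."""
    return omega - a


even = frozenset({2, 4, 6})
odd = frozenset({1, 3, 5})
at_least_five = frozenset({5, 6})

print(are_joint(even, at_least_five))                 # True: both occur on a 6
print(are_incompatible(even, odd))                    # True
print(is_complete_group([even, odd], omega))          # True
print(is_complete_group([even, at_least_five], omega))  # False
print(opposite(even, omega) == odd)                   # True
```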